Hiring a Deep Learning Engineer can turn scattered experiments into production-grade AI that drives measurable value. The right person bridges research and engineering: they design datasets, train models, ship services, and monitor outcomes against business KPIs. This guide shows what to look for, how to structure an efficient hiring process, and where deep learning adds the most impact across product, operations, and growth.

Why Hire a Deep Learning Engineer

Business value

- Automation & accuracy: Replace repetitive manual decisions with models that apply the same criteria consistently at scale.

- Product differentiation: Personalisation, recommendations, and intelligent assistance baked into the core experience.

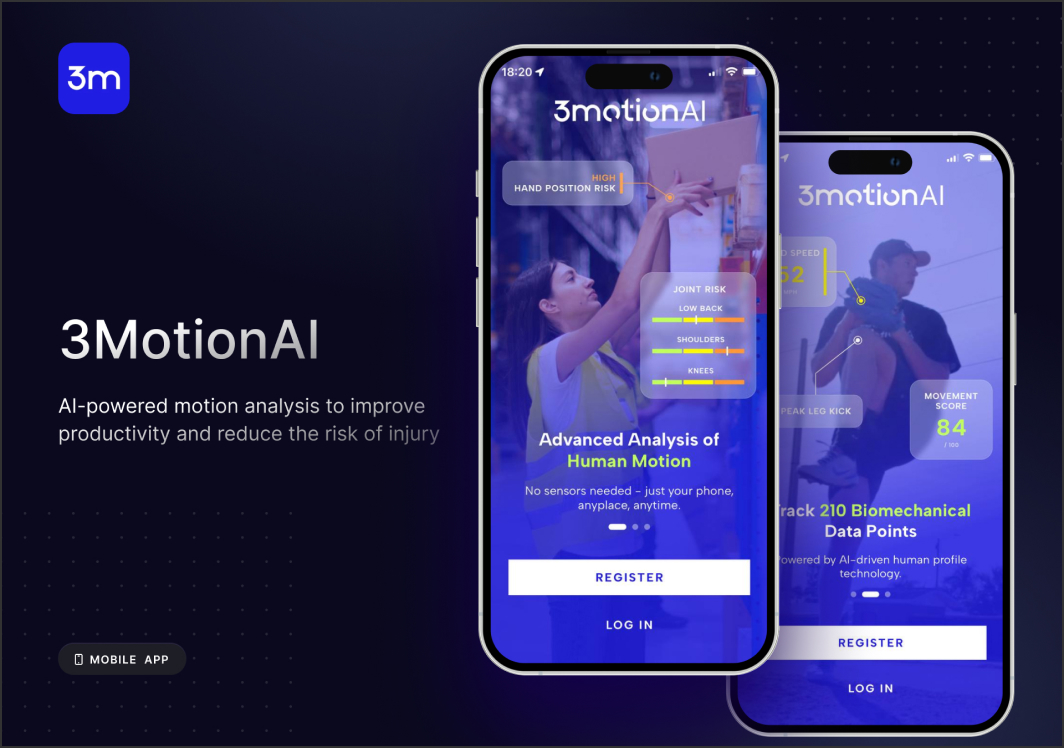

- Efficiency: Faster workflows via computer vision, speech/NLP, and multimodal models that remove repetitive work.

Common impact areas

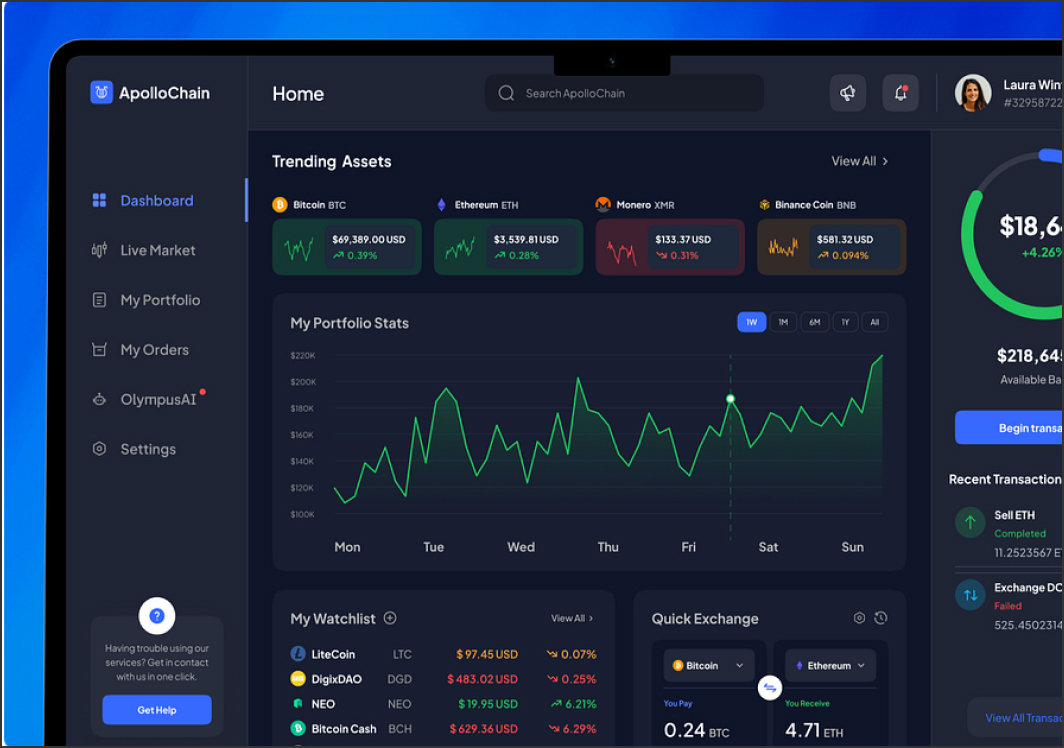

- Smart search and recommendations, churn prediction, fraud/risk signals.

- Document understanding (OCR, entity extraction), content moderation and summarisation.

- Vision pipelines for QA, defect detection, and logistics optimisation.

Core Skills to Look For

Technical stack

- Frameworks & methods: PyTorch/TensorFlow; transformer and diffusion architectures; RLHF and PEFT (e.g., LoRA) for adapting LLMs.

- Data: Feature engineering, dataset curation, augmentation, synthetic data, labelling strategy.

- Training & eval: Efficient fine-tuning, hyperparameter search, metrics (F1, AUROC, BLEU, CIDEr), ablation studies.

- Deployment: ONNX/TensorRT, quantisation/distillation, GPU/CPU inference, streaming/batch APIs.

- MLOps: Experiment tracking, model registry, CI/CD, monitoring for drift and bias, rollback plans.

- Security & compliance: PII handling, privacy-by-design, guardrails, audit logs.
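The evaluation metrics listed above are easy to name and harder to explain, so asking a candidate to compute one from scratch can be a quick signal. The sketch below computes binary F1 and AUROC in plain Python. It is illustrative only: the function names, the 0.5 decision threshold, and the toy labels and scores are assumptions, and production code would normally use library implementations such as scikit-learn's `f1_score` and `roc_auc_score`.

```python
from typing import Sequence


def f1_score(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Binary F1: harmonic mean of precision and recall (label 1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0  # no true positives: precision or recall is 0 (or undefined)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def auroc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUROC as P(random positive scores above random negative); ties count 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    labels = [1, 0, 1, 1, 0, 0]
    scores = [0.9, 0.4, 0.65, 0.3, 0.2, 0.7]
    preds = [1 if s >= 0.5 else 0 for s in scores]  # assumed decision threshold of 0.5
    print(f"F1={f1_score(labels, preds):.3f}  AUROC={auroc(labels, scores):.3f}")
    # → F1=0.667  AUROC=0.667
```

The AUROC here uses the pairwise (Mann–Whitney) formulation, which is O(n·m) and fine for small evaluation sets. Sorting-based implementations scale better. Note that F1 depends on the chosen threshold while AUROC does not, which is a useful follow-up question in interviews.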

Seniority guide (signal checklist)

- Junior: solid Python, can fine-tune baseline models, follows eval protocols, needs guidance for prod.

- Mid: owns features end-to-end, deploys services, chooses metrics, writes tests and docs.

- Senior: designs architecture, optimises cost/latency, mentors others, aligns modelling with business KPIs.

Hiring Process (Lean & High-Signal)

Step-by-step flow

- Define outcomes: set a measurable target, e.g., “Reduce support resolution time by 20% via NLP triage.”

- CV/portfolio screen: prioritise shipped systems, meaningful metrics, and clear READMEs; scan GitHub for tests and issue hygiene.

- Technical interview (90 min):

  - Problem framing & data strategy

  - Modelling trade-offs (baseline → SOTA)

  - Deployment, monitoring, and rollback

- Practical task (4–6 hour cap):

  - Build a small baseline (CV or NLP) with a README covering metrics, latency, and risk.

  - Live review: pair on an extension, such as adding guardrails, quantisation, or an eval harness.
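For the practical task's README, latency is easier to compare across candidates when reported as percentiles rather than a single average. The sketch below assumes the candidate's model is exposed as a plain Python callable. `predict`, `measure_latency_ms`, and the stand-in `fake_model` are hypothetical names, not a specific framework API. It times each call and reports nearest-rank p50/p95 plus the mean, in milliseconds.

```python
import math
import statistics
import time
from typing import Callable, Dict, List, Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of `samples` for pct in (0, 100]."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based nearest rank
    return ordered[max(rank, 1) - 1]


def measure_latency_ms(
    predict: Callable[[object], object],
    inputs: Sequence[object],
    warmup: int = 5,
) -> Dict[str, float]:
    """Time one `predict` call per input and summarise latency in milliseconds."""
    for x in inputs[:warmup]:
        predict(x)  # warm-up calls are excluded (lazy init, caches, JIT)
    timings = []
    for x in inputs:
        start = time.perf_counter()
        predict(x)
        timings.append((time.perf_counter() - start) * 1000.0)
    return {
        "p50_ms": percentile(timings, 50),
        "p95_ms": percentile(timings, 95),
        "mean_ms": statistics.fmean(timings),
    }


def fake_model(text: str) -> List[str]:
    """Hypothetical stand-in for a real model's predict function."""
    return text.lower().split()


if __name__ == "__main__":
    print(measure_latency_ms(fake_model, ["Where is my refund?"] * 200))
```

Excluding warm-up calls keeps one-off initialisation cost out of p95. For a batched serving path, the same harness can time one call per batch instead of per item.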

Evaluation checklist

- Evidence of production deployments and measurable impact

- Sensible baselines before complex architectures

- Cost/latency thinking (batching, caching, quantisation)

- Monitoring plan (drift, bias, feedback loops)

- Documentation and handover quality
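A credible monitoring plan names at least one concrete drift signal. One common choice, assumed here rather than prescribed, is the Population Stability Index (PSI). It compares how a feature or model score is distributed in live traffic against a reference sample: PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the reference and live proportions in bin i. The sketch below uses caller-supplied bin edges and a small epsilon so that empty bins do not produce log(0). The function names and example data are illustrative.

```python
import bisect
import math
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Share of values per bin, given sorted interior edges; eps floors empty bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    total = len(values)
    return [max(c / total, eps) for c in counts]


def psi(reference: Sequence[float], live: Sequence[float], edges: Sequence[float]) -> float:
    """Population Stability Index of `live` against `reference`."""
    expected = bin_proportions(reference, edges)
    actual = bin_proportions(live, edges)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


if __name__ == "__main__":
    reference = [i / 100 for i in range(100)]   # spread evenly over [0, 1)
    live = [0.5 + i / 200 for i in range(100)]  # shifted into [0.5, 1)
    edges = [0.25, 0.5, 0.75]
    print(f"PSI={psi(reference, live, edges):.2f}")  # → PSI=6.56
```

A frequently quoted rule of thumb treats PSI below about 0.1 as stable and above about 0.25 as a significant shift. These cut-offs are heuristics to calibrate on your own data, not a standard.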

Typical Tech Choices & Best Practices

Reference toolkit

- Data & training: PyTorch Lightning, Hugging Face, Weights & Biases, Ray.

- Serving: FastAPI, Triton, TorchServe; vector DBs for retrieval-augmented workflows.

- Optimisation: FP8/INT8 quantisation, distillation, pruning; A/B tests for model changes.
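INT8 quantisation trades a little precision for smaller weights and cheaper integer arithmetic. The sketch below shows only the core arithmetic of symmetric per-tensor quantisation. Real toolchains such as TensorRT or a framework's quantisation tooling add calibration, per-channel scales, and fused kernels on top of this. Each weight w maps to an integer q = round(w / s) clamped to [-127, 127], with scale s = max|w| / 127, and is reconstructed as w ≈ q * s. The function names are illustrative.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantisation: w ≈ scale * q with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero tensors
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Map INT8 codes back to approximate floating-point weights."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0]
    q, scale = quantize_int8(weights)
    print(q)  # → [50, -127, 0, 100]
    restored = dequantize(q, scale)
    # Rounding error per weight is at most scale / 2.
    print(max(abs(w - r) for w, r in zip(weights, restored)))
```

Because a single outlier weight sets the scale for the whole tensor, per-channel scales or calibration on real activations usually preserve more accuracy. This is one reason quantised models should still go through the A/B tests mentioned above.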

Process tips

- Start with clearly scoped pilots; track a single KPI.

- Maintain an evaluation suite and golden datasets.

- Separate dev/test/prod with promotion and rollback controls.
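Golden datasets and promotion controls fit together as an automated gate. Before a candidate model replaces the production one, both are scored on the same frozen golden set, and the candidate is promoted only if it does not regress. The sketch below assumes models are plain callables from text to a label and uses accuracy as the only gate metric. The names (`promotion_gate`, `max_drop`) and the toy support-ticket data are hypothetical. A real gate would usually check several metrics, slices, and latency.

```python
from dataclasses import dataclass
from typing import Callable, Hashable, Sequence, Tuple

Model = Callable[[str], Hashable]           # hypothetical: input text -> predicted label
GoldenSet = Sequence[Tuple[str, Hashable]]  # frozen (input, expected label) pairs


def accuracy(model: Model, golden: GoldenSet) -> float:
    return sum(1 for x, y in golden if model(x) == y) / len(golden)


@dataclass
class GateResult:
    promote: bool
    candidate_acc: float
    production_acc: float


def promotion_gate(candidate: Model, production: Model, golden: GoldenSet, max_drop: float = 0.0) -> GateResult:
    """Promote only if the candidate is at most `max_drop` below production on the golden set."""
    cand = accuracy(candidate, golden)
    prod = accuracy(production, golden)
    return GateResult(promote=cand >= prod - max_drop, candidate_acc=cand, production_acc=prod)


def production_model(text: str) -> str:
    return "billing" if ("refund" in text or "charged" in text) else "bug"


def candidate_model(text: str) -> str:
    return "account" if "password" in text else production_model(text)


if __name__ == "__main__":
    golden = [
        ("refund please", "billing"),
        ("app crashes", "bug"),
        ("reset password", "account"),
        ("charged twice", "billing"),
    ]
    print(promotion_gate(candidate_model, production_model, golden))
    # → GateResult(promote=True, candidate_acc=1.0, production_acc=0.75)
```

If the gate fails, production simply stays on the current model. Keeping the golden set frozen and versioned makes scores comparable across releases.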

30–60–90 Day Onboarding Plan

- 30 days: Audit data, ship a baseline model + eval harness, define latency/quality targets.

- 60 days: Deploy to a small traffic slice with monitoring and guardrails; collect feedback.

- 90 days: Optimise cost/latency, expand coverage, document runbooks, and hand over ownership to relevant teams.
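Deploying to a small traffic slice requires a stable rule for which requests see the new model. One common approach, assumed here rather than taken from the plan, hashes a user ID together with a per-rollout salt into 10,000 buckets. Each user then always gets the same variant, and widening the slice (say from 5% to 20%) keeps everyone who was already in it. `in_rollout` and the salt value are illustrative names.

```python
import hashlib


def in_rollout(user_id: str, percent: float, salt: str = "model-v2-rollout") -> bool:
    """Deterministically place `percent`% of users in the new-model slice."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999, i.e. 0.01% granularity
    return bucket < percent * 100  # e.g. 5% -> buckets 0..499


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(20_000)]
    share = sum(in_rollout(u, 5) for u in users) / len(users)
    print(f"share routed to new model: {share:.3f}")  # close to 0.05, not exact
```

Changing the salt reshuffles assignments, which is useful between unrelated experiments but should be avoided mid-rollout.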

Page Updated: 2025-10-06